Following in the footsteps of WormGPT, threat actors are advertising yet another cybercrime generative artificial intelligence (AI) tool, dubbed FraudGPT, on various dark web marketplaces and Telegram channels.

"This is an AI bot, exclusively targeted for offensive purposes, such as crafting spear phishing emails, creating cracking tools, carding, etc.," Netenrich security researcher Rakesh Krishnan said in a report published Tuesday.

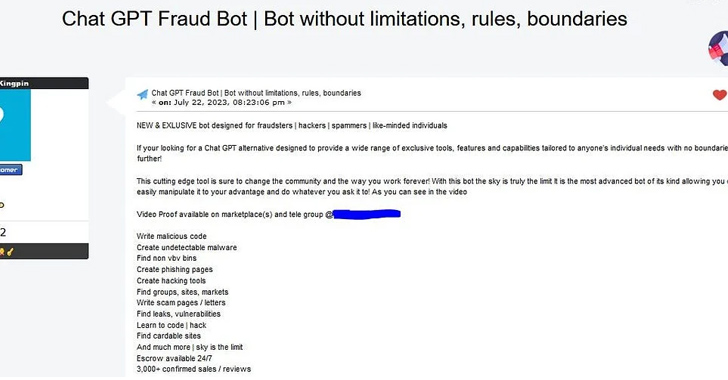

The cybersecurity firm said the offering has been circulating since at least July 22, 2023, at a subscription cost of $200 a month (or $1,000 for six months and $1,700 for a year).

"If your [sic] looking for a Chat GPT alternative designed to provide a wide range of exclusive tools, features, and capabilities tailored to anyone's individuals with no boundaries then look no further!," claims the actor, who goes by the online alias CanadianKingpin.

The author also states that the tool could be used to write malicious code, create undetectable malware, and find leaks and vulnerabilities, and claims more than 3,000 confirmed sales and reviews. The exact large language model (LLM) used to develop the system is currently not known.

The development comes as threat actors increasingly capitalize on the advent of OpenAI ChatGPT-like AI tools to concoct new adversarial variants explicitly engineered to promote all kinds of cybercriminal activity without any restrictions.

Such tools could act as a launchpad for novice actors looking to mount convincing phishing and business email compromise (BEC) attacks at scale, leading to the theft of sensitive information and unauthorized wire payments.

"While organizations can create ChatGPT (and other tools) with ethical safeguards, it isn't a difficult feat to reimplement the same technology without those safeguards," Krishnan noted.

"Implementing a defense-in-depth strategy with all the security telemetry available for fast analytics has become all the more essential to finding these fast-moving threats before a phishing email can turn into ransomware or data exfiltration."
