Meta AI Introduces A New AI Technology Called ‘Few-Shot Learner (FSL)’ To Tackle Harmful Content

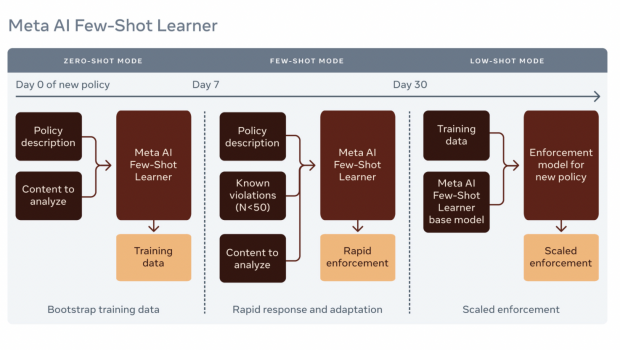

Training AI models typically requires a massive number of labeled examples, often tens of thousands to millions. Collecting and labeling this data can take several months, which delays the deployment of AI systems that can detect new types of harmful content across social media platforms. To address this, Meta has deployed a new AI model called “Few-Shot Learner” (FSL) that can detect harmful content even when little labeled data is available.

Meta’s FSL deployment is a step towards more generalized AI models that need very little, or almost no, labeled data for training. FSL belongs to an emerging field of AI called meta-learning, where the aim is “learning to learn” rather than “learning patterns” as in traditional AI models. The FSL is first trained on generic natural language examples, which act as the training set. Next, the model is trained on new policy texts that describe the harmful target content, together with policy-violating content that has been labeled in the past; these act as the support set. Meta reports that its FSL outperforms several existing state-of-the-art few-shot learning methods by 12% on average across various systematic evaluation schemes. For further details, one can consult Meta’s research paper.
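To make the idea of a support set concrete, here is a minimal sketch of few-shot classification. It is not Meta's method: it swaps in a simple nearest-prototype classifier over bag-of-words counts, where the `embed` function stands in for a pre-trained language model. The example texts, the label names, and every function name are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase bag-of-words counts.

    Assumption: this stands in for a pre-trained language encoder.
    """
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prototypes(support_set):
    """Sum the embeddings of each label's support examples into one prototype."""
    prototypes = {}
    for text, label in support_set:
        prototypes.setdefault(label, Counter()).update(embed(text))
    return prototypes


def classify(text, prototypes):
    """Return the label whose prototype is most similar to the query text."""
    query = embed(text)
    return max(prototypes, key=lambda label: cosine(query, prototypes[label]))


# Hypothetical support set: only two labeled examples per class.
SUPPORT_SET = [
    ("vaccines secretly alter your dna", "violating"),
    ("do not trust the vaccine it is poison", "violating"),
    ("i got my vaccine at the pharmacy today", "benign"),
    ("the clinic opens at nine for vaccine appointments", "benign"),
]

prototypes = build_prototypes(SUPPORT_SET)

if __name__ == "__main__":
    print(classify("this vaccine is poison and will alter your dna", prototypes))
```

The point of the sketch is that a new category needs only a handful of labeled examples once a general-purpose representation exists. In a real system the quality of that representation does most of the work, which is why the pre-training step matters.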

Because it is pre-trained on generic language data, the FSL model can learn policy texts implicitly and act quickly against harmful content on social media platforms. According to Meta, the few-shot learner’s deployment on Facebook and Instagram has been highly successful, helping to stop the spread of newly evolving harmful content such as hate speech and posts that promote vaccine hesitancy. Meta is working to improve these AI models, with the goal of generalized models that can read and comprehend pages of policy text and implicitly learn how to enforce them.

Beyond detecting and removing harmful content, few-shot learning can play a major role in accelerating computer vision research, specifically in tasks such as action localization, character recognition, and video motion tracking. In natural language processing (NLP), it can help with sentiment classification, sentence completion, and translation. Another major application is voice cloning, which is becoming increasingly important with the arrival of new digital assistant products on the market.

Meta plans to incorporate FSL and other meta-learning strategies into its past and upcoming policy-enforcing AI models, enabling a shared knowledge backbone that can deal with various types of violations. In the future, FSLs are likely to be used to assist policy creators and to shorten enforcement time by orders of magnitude. Meta also plans to grow its investment in zero-shot and few-shot learning research to develop technologies that go beyond a traditional understanding of content and infer the behavioral and conversational context behind it. These early milestones point towards a shift in AI/DL research interest from traditional pattern-learning models to generalized systems that can learn multiple tasks, demand little labeling effort, and require a shorter deployment period.

Paper: https://arxiv.org/pdf/2104.14690.pdf

Reference: https://ai.facebook.com/blog/harmful-content-can-evolve-quickly-our-new-ai-system-adapts-to-tackle-it/
