C3.ai becomes the first to debut a generative A.I. search tool


The generative A.I. future is coming very fast. It is going to be highly disruptive—in both good ways and bad. And we are definitely not ready.

These points were hammered home to me over the past couple of days in conversations with executives from three different companies.

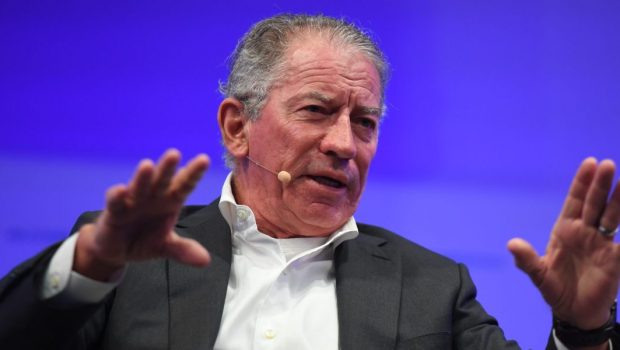

First, I spoke earlier today with Tom Siebel, the billionaire co-founder and CEO of C3.ai. He was briefing me on a new enterprise search tool, just announced by C3.ai, that is powered by the same kind of large language models that underpin OpenAI’s ChatGPT. But unlike ChatGPT, C3.ai’s enterprise search bar retrieves answers from within a specific organization’s own knowledge base, and it can then both summarize that information into a concise paragraph and provide citations to the original documents where it found that information. What’s more, the new search tool can generate analytics, including charts and graphs, on the fly.

News of the new generative A.I.-powered search tool sent C3.ai’s stock soaring—up 27% at one point during the day.

In a hypothetical example Siebel showed me, a manager in the U.K.’s National Health Service could type a simple question into the search bar: “What is the trend for outpatient procedures completed daily by specialty across the NHS?” Within about a second, the search engine retrieves information from multiple databases and creates a pie chart showing a live snapshot of the percentage of procedures grouped by specialty, as well as a fever chart showing how each of those numbers is changing over time.

The key here is that those charts and graphs didn’t exist anywhere in the NHS’s vast corpus of documents; they were generated by the A.I. in response to a natural language query. The manager can also see a ranked list of the documents that contributed to those charts and drill down into each of them with a single mouse click. C3.ai has also built filters so that users can retrieve only the data in the knowledge base that they are permitted to see, a key requirement for data privacy and national security for many of the government and financial services customers C3.ai works with.
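C3.ai has not published how its search tool works, but the permission filtering described above follows a common pattern: drop everything a user is not cleared to see before ranking, so restricted text can never surface in a summary or a citation. The Python sketch below illustrates only that pattern. The `Document` type, the group-based access model, and the word-overlap scoring are hypothetical stand-ins, not C3.ai’s design; a real system would rank with a language model or vector search.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # hypothetical ACL: groups allowed to read this document

def relevance(query: str, doc: Document) -> int:
    # Toy relevance score: how many distinct query words appear in the document.
    return len(set(query.lower().split()) & set(doc.text.lower().split()))

def permitted_search(query: str, docs: list, user_groups: set, top_k: int = 3) -> list:
    # 1. Enforce permissions first, so restricted documents never reach
    #    ranking, summarization, or the citation list.
    visible = [d for d in docs if d.allowed_groups & user_groups]
    # 2. Rank the remaining documents, keeping only those with some relevance.
    scored = [(relevance(query, d), d) for d in visible]
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    # 3. Return (citation id, text) pairs: the text would feed a summarizer,
    #    and the ids would be shown to the user as citations.
    return [(d.doc_id, d.text) for _, d in ranked[:top_k]]

# Hypothetical knowledge base with per-document access groups.
docs = [
    Document("nhs-001", "outpatient procedures by specialty cardiology", frozenset({"analysts"})),
    Document("nhs-002", "outpatient procedures trend orthopaedics", frozenset({"analysts", "managers"})),
    Document("mod-007", "classified outpatient procedures", frozenset({"cleared"})),
]
print(permitted_search("outpatient procedures trend", docs, {"managers"}))
# → [('nhs-002', 'outpatient procedures trend orthopaedics')]
```

Filtering before ranking, rather than hiding results afterward, is the safer ordering: a document the user cannot see never influences the generated summary.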

“I believe this is going to fundamentally change the human-computer interaction model for enterprise applications,” Siebel says. “This is a genuinely game-changing event.” He points out that everyone knows how to type in a search query; it requires no special training in complex software. C3.ai will begin rolling the tool out to customers that include the U.S. Department of Defense, the U.S. intelligence community, the U.S. Air Force, Koch Industries, and Shell Oil, with general release scheduled for March.

Nikhil Krishnan, the chief technology officer for products at C3.ai, tells me that under the hood, most of the natural language processing is currently driven by FLAN-T5, a language model that Google developed and open-sourced. He says it has advantages over OpenAI’s GPT models, not just in cost but also because it is small enough to run on almost any enterprise’s own network. GPT is too big for most customers to use, Krishnan says.

Okay, so that’s pretty game-changing. But in some ways, a system I saw the day before seemed even more potentially disruptive. On Monday, I had coffee with Tariq Rauf, the founder and CEO of a London-based startup called Qatalog. Its A.I. software takes a simple prompt about the industry a company is in and then creates, in essence, a set of bespoke software tools just for that business. A bit like C3.ai’s enterprise search tool, Qatalog’s software can also pull data from existing systems and company documentation. But it can do more than just run analytics on top of that data: it can generate the code needed to run a Facebook ad using your marketing assets, all from a simple text prompt. “We have never built software this way, ever,” Rauf says.

People are still needed in this process, he points out, but far fewer of them than before. Qatalog could enable very small teams (think just a handful of people) to do the kind of work that once would have required dozens or even hundreds of employees or contractors. “And we are just in the foothills of this stuff,” he says.

Interestingly, Qatalog is built on top of open-source language models, in this case BLOOM, a system created by a research collective that included A.I. company Hugging Face, EleutherAI, and more than 250 other institutions. (It also uses some technology from OpenAI.) It’s a reminder that OpenAI is not the only game in town, and that Microsoft’s early lead and partnership with OpenAI do not mean it is destined to win the race to create the most popular and effective generative A.I. workplace productivity tools. There are plenty of other competitors circling and scrambling for market share, and right now it is far from clear who will emerge on top.

Finally, I also spent some time this week with Nicole Eagan, the chief strategy officer at the cybersecurity firm Darktrace, and Max Heinemeyer, the company’s chief product officer. For Fortune’s February/March magazine cover story on ChatGPT and its creator, OpenAI, I interviewed Maya Horowitz, the head of research at cybersecurity company Check Point, who told me that her team had managed to get ChatGPT to craft every stage of a cyberattack, starting with a convincing phishing email and proceeding all the way through writing the malware, embedding it in a document, and attaching that document to an email. Horowitz told me she worried that by lowering the barrier to writing malware, ChatGPT would lead to many more cyberattacks.

Darktrace’s Eagan and Heinemeyer share this concern, but they point to another scary use of ChatGPT. While the total number of cyberattacks monitored by Darktrace has remained about the same, Eagan and Heinemeyer have noticed a shift in cybercriminals’ tactics: the share of phishing emails that try to trick a victim into clicking a malicious link embedded in the email has actually declined, from 22% to just 14%. But the average linguistic complexity of the phishing emails Darktrace is analyzing has jumped by 17%.

Darktrace’s working theory, Heinemeyer tells me, is that ChatGPT is allowing cybercriminals to rely less on infecting a victim’s machine with malware and instead to hit paydirt through sophisticated social engineering scams. Consider a phishing email that impersonates a company’s top executive and flags an overdue bill: if the style and tone of the message are convincing enough, an employee could be duped into wiring money to a fraudster’s account. Criminal gangs could also use ChatGPT to pull off even more complex, long-term cons that depend on building a greater degree of trust with the victim. (Generative A.I. for voices is also making it easier to impersonate executives on phone calls, which can be combined with fake emails into complex scams, none of which depend on traditional hacking tools.)

Eagan said Darktrace has been experimenting with its own generative A.I. systems for red-teaming and cybersecurity testing, using a large language model fine-tuned on a customer’s own email archives that can produce incredibly convincing phishing emails. Eagan says she recently fell for one of these emails herself, sent by her own cybersecurity team to test her. One of the tricks: the phishing email was inserted as what appeared to be a reply in a legitimate email thread, making it nearly impossible to detect the phish from any visual or linguistic cues in the email itself.

To Eagan this is just further evidence of the need to use automated systems to detect and contain cyberattacks at machine speed, since the odds of identifying and stopping every phishing email have just become that much longer.

Phishing attacks on steroids; analytics built on the fly on top of summary replies to search queries; bespoke software at the click of a mouse. The future is coming at us fast.

Before we get to the rest of this week’s A.I. news, a quick correction on last week’s special edition of the newsletter: I misspelled the name of the computer scientist who heads JPMorgan Chase’s A.I. research group. It is Manuela Veloso. I also misstated the amount the bank is spending per year on technology. It is $14 billion, not $12 billion. My apologies.

And with that, here is the rest of this week’s A.I. news.

Jeremy Kahn

@jeremyakahn

jeremy.kahn@fortune.com

A.I. IN THE NEWS

OpenAI launches a ‘universal’ A.I. writing detector. The company behind ChatGPT has said it has created a system that can automatically classify writing as likely written by an A.I. system, even ones other than its own GPT-based models. But OpenAI warned that its classifier is not very reliable on texts of less than 1,000 characters, so good luck with those phishing emails. And even on longer texts its results were not fantastic: the classifier correctly identified 26% of A.I.-written text while incorrectly labeling about 9% of human-written text as “likely written by an A.I.” The company had previously unveiled a classifier that was much better at identifying text written by its own ChatGPT system but that did not work for text generated by other A.I. models. You can read more about the classifier on OpenAI’s blog and even try it out yourself.
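Rates like 26% and 9% are easier to judge against a concrete batch of text. The Python sketch below assumes, hypothetically, that OpenAI’s reported rates hold for a pile of 1,000 documents of which only 100 were written by A.I., and counts how many of the classifier’s flags would be correct. The batch sizes are made up for illustration.

```python
def classifier_outcomes(n_ai: int, n_human: int, tpr: float = 0.26, fpr: float = 0.09):
    """Expected counts for a detector with the given true- and false-positive rates."""
    true_flags = tpr * n_ai        # A.I.-written texts correctly flagged
    missed = n_ai - true_flags     # A.I.-written texts that slip through
    false_flags = fpr * n_human    # human-written texts wrongly flagged
    precision = true_flags / (true_flags + false_flags)  # share of flags that are correct
    return true_flags, missed, false_flags, precision

# Hypothetical batch: 100 A.I.-written texts mixed in with 900 human-written ones.
true_flags, missed, false_flags, precision = classifier_outcomes(100, 900)
print(f"correct flags: {true_flags:.0f}, missed: {missed:.0f}, "
      f"wrong flags: {false_flags:.0f}, precision: {precision:.0%}")
```

Under those assumptions, the detector would miss 74 of the 100 A.I.-written texts, and roughly three out of every four of its flags would land on human writers.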

U.S.-EU sign A.I. agreement. The U.S. and European Union have signed an agreement to work together to increase the use of A.I. in agriculture, healthcare, emergency response, climate forecasting, and the electric grid, Reuters reported. An unnamed senior U.S. official told the news service that the two powers would work together to build joint models on their collective data without actually having to share the underlying data with each other, which might run afoul of EU data privacy laws. Exactly how this would be accomplished was not stated, but privacy-preserving machine learning techniques, such as federated learning, might be used.
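Federated learning is only one possibility, since the officials did not say how the data would be protected. In its best-known form, federated averaging, each party trains a copy of a shared model on its own data, and only the resulting model weights are pooled and averaged. The Python sketch below shows that loop on a toy one-parameter model; the two datasets, the learning rate, and the round counts are hypothetical, and real deployments add safeguards such as secure aggregation.

```python
def local_update(w: float, data: list, lr: float = 0.01, epochs: int = 50) -> float:
    # Gradient descent on mean squared error for y ≈ w * x,
    # using only this party's private data.
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(w_global: float, parties: list, rounds: int = 20) -> float:
    total = sum(len(d) for d in parties)
    for _ in range(rounds):
        # Each party starts from the shared global weight and trains locally.
        local_ws = [local_update(w_global, d) for d in parties]
        # Only the weights leave each party; combine them weighted by data size.
        w_global = sum(w * len(d) for w, d in zip(local_ws, parties)) / total
    return w_global

# Hypothetical private datasets held by two parties, both following y = 3x.
us_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
eu_data = [(4.0, 12.0), (5.0, 15.0)]
w = federated_average(0.0, [us_data, eu_data])
print(round(w, 3))
```

The joint model learns the slope both datasets share, even though neither party’s raw `(x, y)` pairs ever leave its own `local_update` call.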

BuzzFeed to use ChatGPT for quizzes and some personalized content. The online publisher’s CEO Jonah Peretti said he intended for A.I. to play a larger role in the company’s editorial and business operations, The Wall Street Journal reported. In a memo seen by the newspaper, Peretti told BuzzFeed staff that the technology would be used to create personalized quizzes and some other personalized content, but that the company’s news operation would remain focused on human journalists.

Google creates a text-to-music generative A.I. The company said it had created an A.I. system called MusicLM that can generate long, high-fidelity tracks in almost any genre from text descriptions of what the music should sound like. But, according to a story in TechCrunch, the music produced isn’t flawless: sung vocals are a particular problem, and some of the tracks have a distorted quality. Google also found that at least 1% of what the model generates is lifted directly from songs on which the A.I. system was trained, raising potential copyright issues. This is one reason Google is not currently releasing the model to the public, TechCrunch reported.

4chan users rush to A.I. voice cloning tool to create hate speech in celebrity voices. The anything-goes site 4chan has been flooded with hateful memes and hate speech read in the voices of famous actors after users began gravitating to a new voice cloning A.I. tool released for free by the startup ElevenLabs, tech publication The Verge reported. The company said on Twitter that it was aware of the misuses of its product and that it was investigating ways to mitigate them.

EYE ON A.I. RESEARCH

DeepMind creates an A.I. software agent that demonstrates “human-like” adaptability to new tasks. The London-based A.I. research firm said it had created an agent called “AdA” (short for Adaptive Agent) that uses reinforcement learning and can seemingly adapt to brand-new tasks in new 3D game worlds in about as much time as a human needs to adapt to the same task. That would seem to be a major breakthrough, but it was achieved by pre-training the agent on a vast number of different tasks in millions of different environments, using a set curriculum in which the tasks build on one another and become successively more difficult. So while the adaptability is a big achievement, it still isn’t the sort of learning efficiency that human infants or toddlers exhibit. That said, this may not matter for practical use cases: once pre-trained, one of these AdAs could take on almost any task a human can do and learn to do it relatively quickly. The key here is that the reinforcement learning community is starting to take a page from the large language model playbook and build foundation systems trained on a big learning corpus. You can read DeepMind’s research paper here.

FORTUNE ON A.I.

Waymo and Cruise ditched safety drivers. Now, the cars are breaking road rules and causing traffic mayhem—by Andrea Guzman

Silicon Valley is old news. Welcome to ‘Cerebral Valley’ and the tech bro morphing into the A.I. bro—by Chris Morris

A.I. chatbot lawyer backs away from first court case defense after threats from ‘State Bar prosecutors’—by Alice Hearing

Sam Altman, the maker of ChatGPT, says the A.I. future is both awesome and terrifying. If it goes badly: ‘It’s lights-out for all of us’—by Tristan Bove

BRAIN FOOD

If you don't want to be in a race, stop running so hard. The other day The New York Times reported that Google, in response to the wild popularity of OpenAI’s ChatGPT and OpenAI’s expanded partnership with Microsoft, had said in an internal company presentation that it was going to “recalibrate” its risk tolerance around releasing A.I.-enhanced products and services. In response to this report, Sam Altman, the CEO and co-founder of OpenAI, tweeted: “‘recalibrate’ means ‘increase’ obviously. disappointing to see this six-week development. openai will continually decrease the level of risk we are comfortable taking with new models as they get more powerful, not the other way around.”

But there’s something kind of ridiculous about Altman, who arguably forced Google into this position with his own company’s actions, turning around and throwing shade at Alphabet and its CEO Sundar Pichai because the company feels it has been penalized for being responsible and cautious and now thinks it ought to be a little less so. Altman's protestations are like those of an elite marathon runner who, after breaking away from the pack, turns around to chastise a competitor for picking up the pace to try to reel him back in.

Altman claims to be concerned about the potential downsides of AGI, artificial general intelligence. And folks in the A.I. safety research field who share those concerns have been warning for a while now that one of the ways we might wind up developing dangerous A.I. technology is if the various factions within the research community see themselves as engaged in a technological arms race. Altman is no doubt very aware of this.

But if you really care about that risk, then what you don't do is release a viral chatbot to the entire world so your own company can gather a lot more data than anyone else, while at the same time inking a multibillion-dollar commercialization deal with one of the arch-rivals of another major force in advanced A.I. research. How did Altman think Google was going to respond?!
